Portfolio

Imitative Navigator

2023-24

Summary: When applying reinforcement learning (RL) to robotic problems, training a policy from scratch is often time-intensive, since the sparse reward signal cannot be sampled efficiently. One solution is to “jump-start” the training by showing the agent how the task is “roughly” done, albeit in a suboptimal way. With this prior knowledge, the agent can quickly sample the reward and then fine-tune the policy.

To this end, I implemented an imitation learning algorithm called AWAC (Advantage Weighted Actor Critic) in the context of spatial navigation. Using demonstrations from an easy-to-implement policy (object avoidance), it significantly reduced the training time. It was the first instance in which imitation learning was applied to a spatial navigation problem. The algorithm can be applied to a wide range of RL problems.
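As a rough illustration of the idea behind AWAC, the actor is trained to imitate actions from the replay data, with each action weighted by the exponential of its estimated advantage. The following is a minimal NumPy sketch and not the code from the repository: the function name, the `temperature` parameter and the weight clipping (`max_weight`) are assumptions made for illustration.

```python
import numpy as np

def awac_actor_loss(log_probs, q_values, state_values,
                    temperature=1.0, max_weight=20.0):
    """Advantage-weighted negative log-likelihood over a batch.

    log_probs    : log pi(a|s) of the stored actions under the current policy
    q_values     : critic estimates Q(s, a) for the stored actions
    state_values : baseline estimates V(s)
    temperature  : hypothetical name for the scaling of the advantage
    max_weight   : hypothetical clipping value, added for numerical stability
    """
    advantages = np.asarray(q_values) - np.asarray(state_values)
    # Actions with higher advantage get exponentially larger weights.
    weights = np.minimum(np.exp(advantages / temperature), max_weight)
    return float(-np.mean(weights * np.asarray(log_probs)))

# Toy batch: two actions with equal likelihood, one better than the baseline.
log_probs = np.array([-1.0, -1.0])
q_values = np.array([1.0, 0.0])
state_values = np.array([0.0, 0.0])
loss = awac_actor_loss(log_probs, q_values, state_values)
```

In this toy batch, the action with positive advantage contributes more to the loss, so a gradient step would raise its probability more strongly than that of the neutral action.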

Methods: Deep Reinforcement Learning (PyTorch), Numerical Simulation (NumPy), Robotic Simulator (Webots, Python), Computer Vision (OpenCV & pretrained CNNs), Classical Machine Learning.

GitHub repo: https://github.com/yyhhoi/ImiNav

Dual-Sequence Net

2021-23

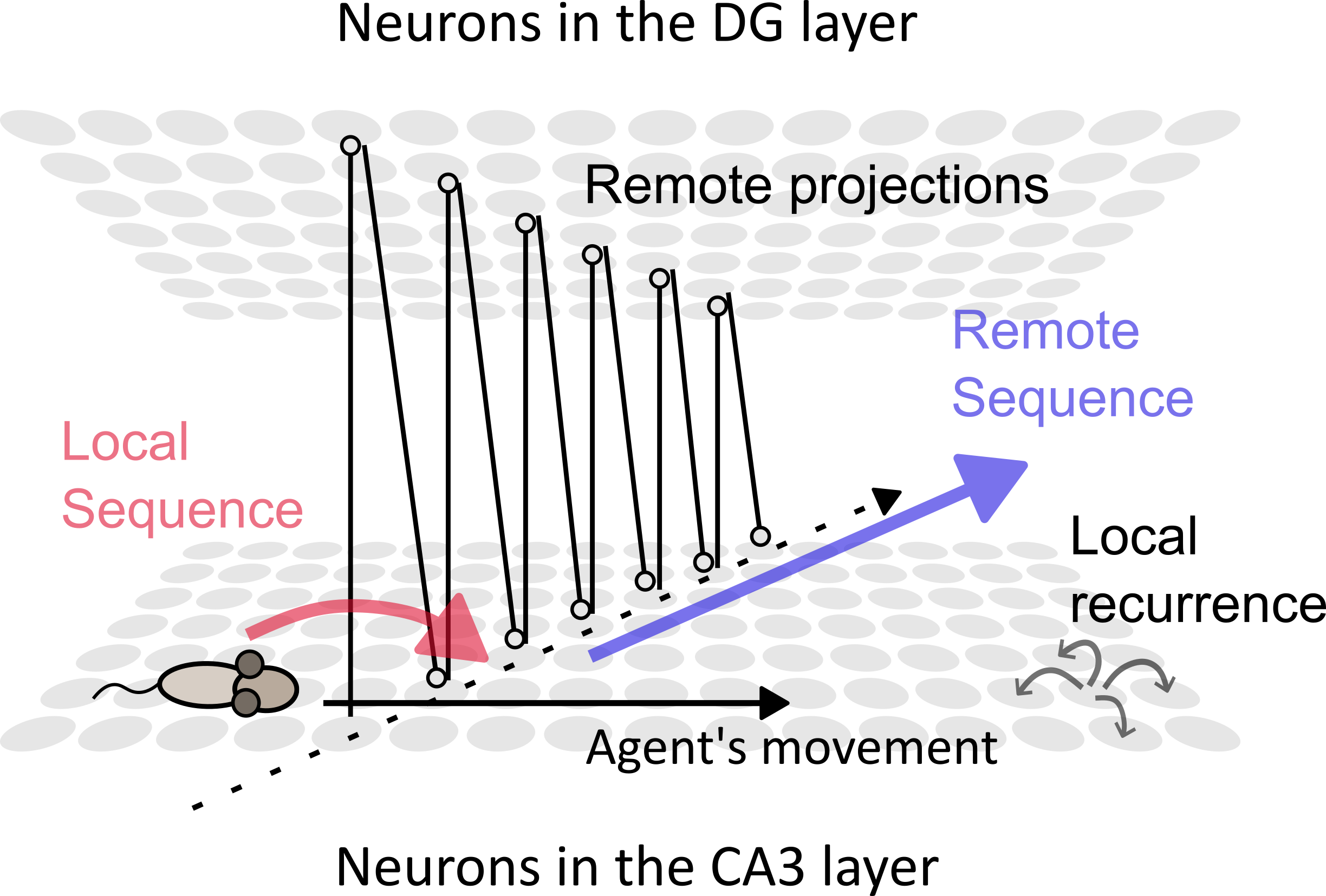

Summary: Human thinking is inherently dual in nature: while we are chopping an onion (local/actual actions), our mind might wander and ask, what if we watched TV instead? (remote/virtual plans). To model such a process, we simulated a biological neural network to test our theory. We showed that sequential firing of neurons can support such dual coding, and published our results in eLife.

Methods: Mathematical Modelling of Complex Systems, Numerical Simulation (Python, C++), Classical Machine Learning, Synaptic Learning Rules, Time-series Analysis.

Publication: Yiu, Y.-H., & Leibold, C. (2023). A theory of hippocampal theta correlations accounting for extrinsic and intrinsic sequences. eLife, 12, RP86837. https://doi.org/10.7554/eLife.86837.4

Brain Data Science

2019-22

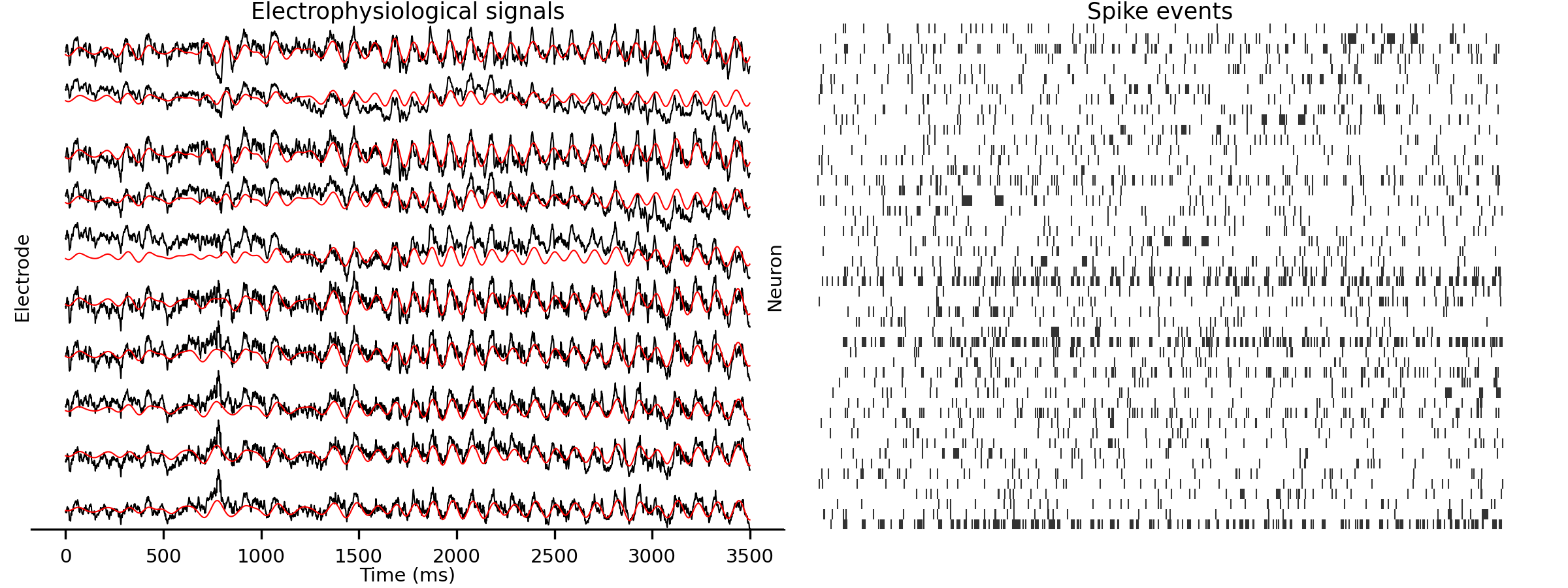

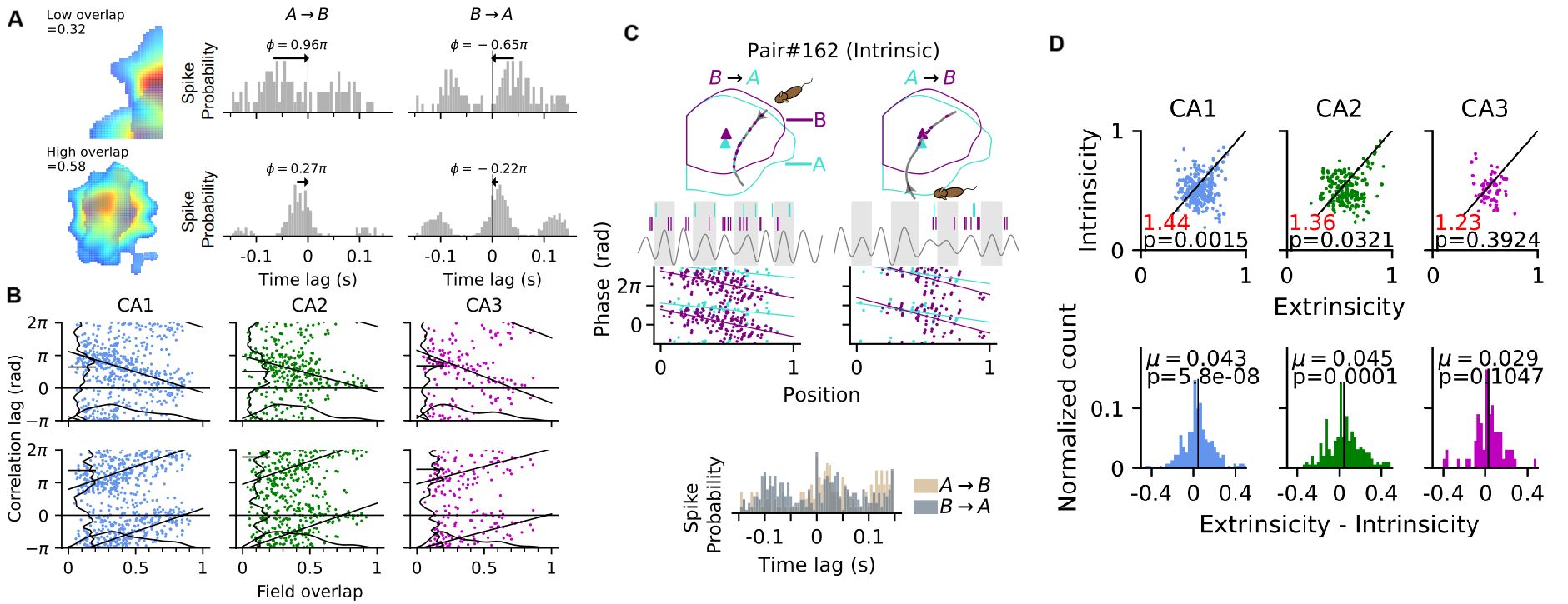

Summary: We proposed a network model of dual processes, but is this actually how the brain works? By analyzing brain data recorded from rodents with microelectrodes, we were able to show that such dual coding exists, and published our results in The Journal of Neuroscience.

Methods: Circular Statistics, Regression Analysis, Hypothesis Testing, Linear and Non-Linear Classifiers, Time-series Analysis, Bayesian Parameter Estimation.

Publication: Yiu, Y.-H., Leutgeb, J. K., & Leibold, C. (2022). Directional Tuning of Phase Precession Properties in the Hippocampus. The Journal of Neuroscience, 42(11), 2282–2297. https://doi.org/10.1523/JNEUROSCI.1569-21.2021

Latent Gait Patterns

2019

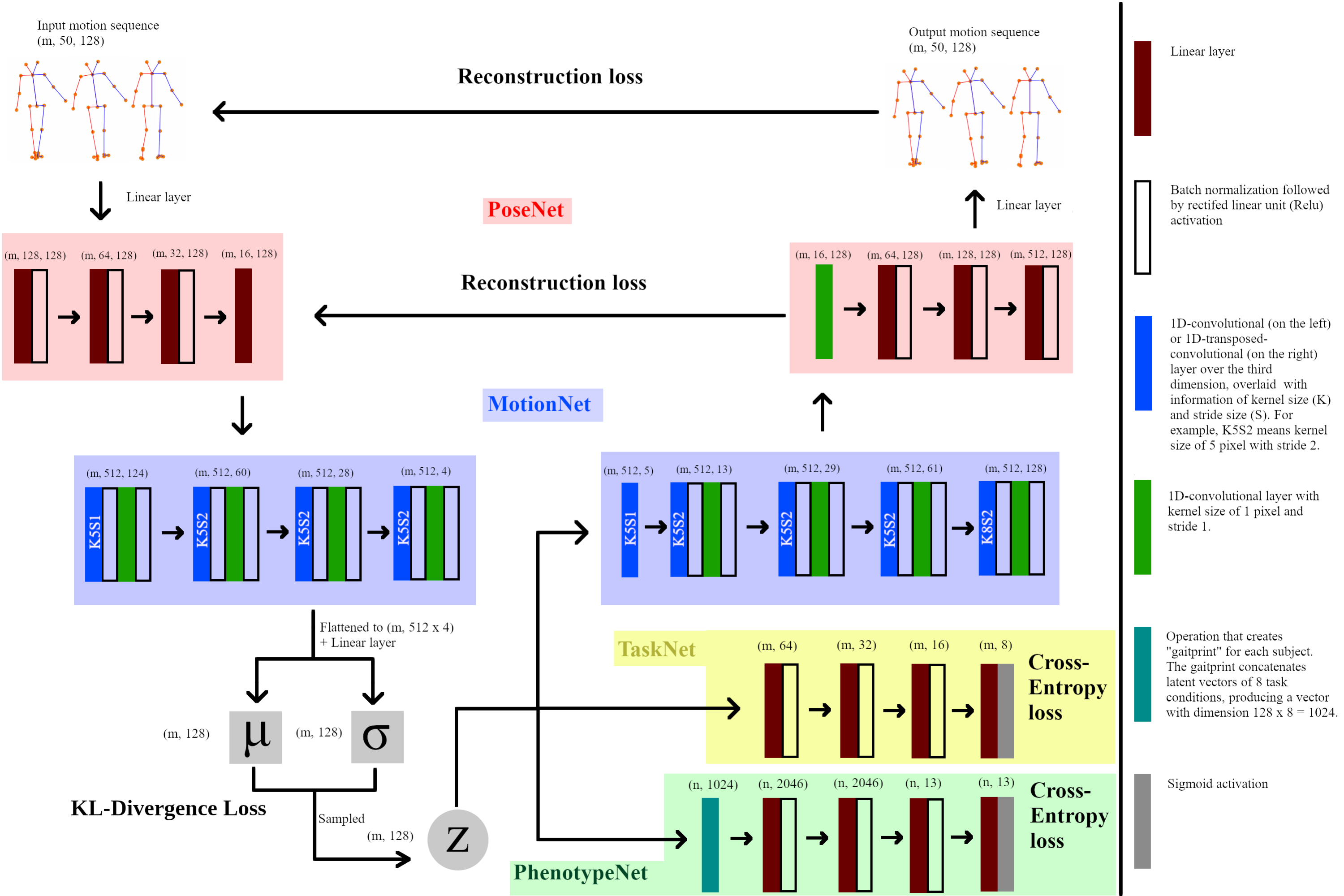

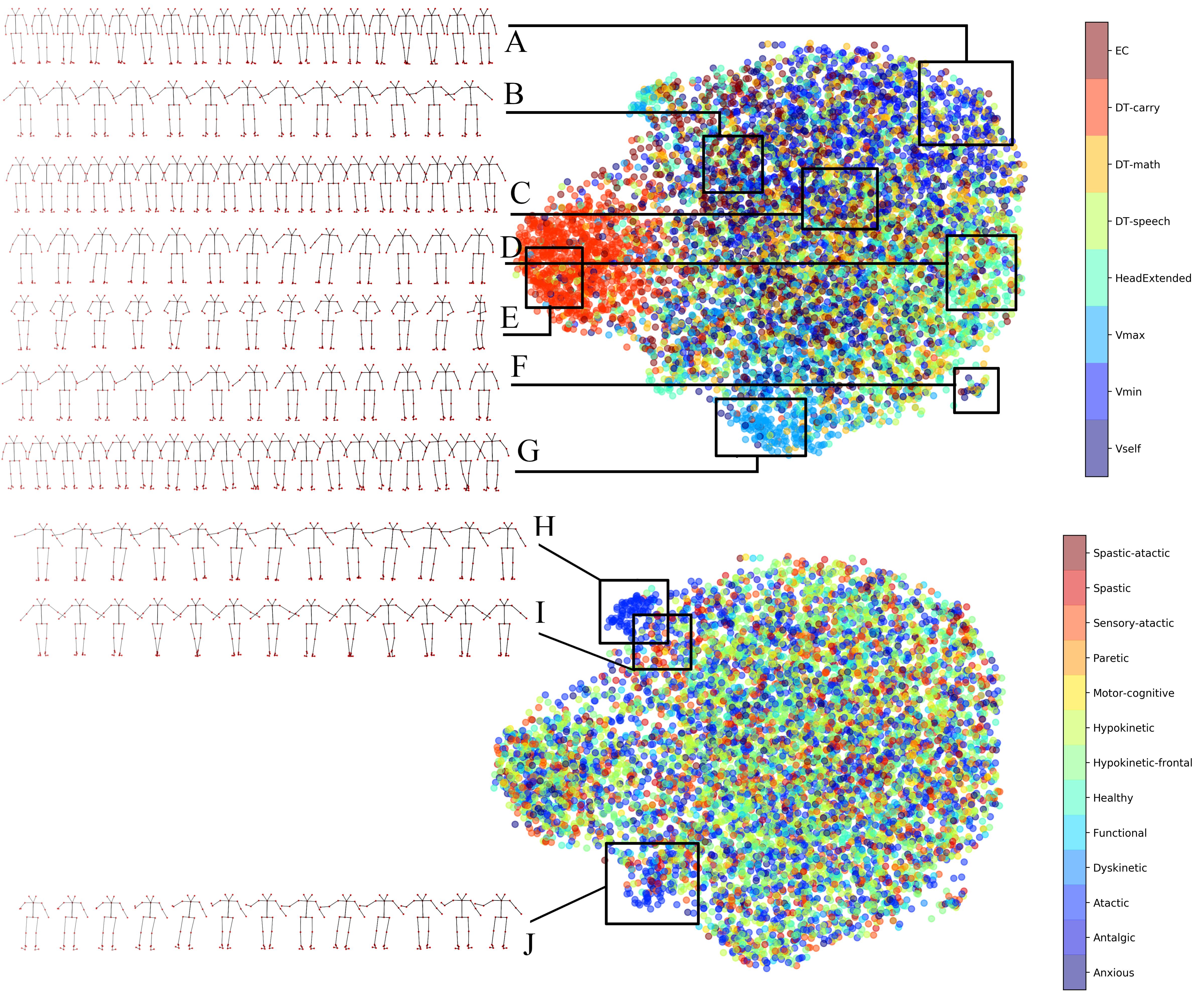

Summary: People with vertigo and balance disorders often exhibit abnormal gait patterns while walking, such as staggering or overly rigid motion. Here, we employed generative models such as Variational Autoencoders (VAEs) to extract the latent variables underlying these pathological walking patterns.

In cooperation with LMU clinics, we gained access to thousands of walking videos of patients. After training the VAEs on these videos, we found that pathological walking patterns were clearly clustered in the latent space. Such a representation can aid in video-based diagnosis without relying on clinical assessment.
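For readers unfamiliar with VAEs, the latent variables come from an encoder that outputs a mean and a log-variance per input, and the latent space is shaped by a KL-divergence penalty. The following is a minimal NumPy sketch of these two standard ingredients, not the thesis code: the function names and the toy two-dimensional latent space are assumptions made for illustration.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, with eps drawn from a standard normal."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, diag(sigma^2)) and N(0, I), per sample."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=-1)

# Toy encoder outputs for 4 samples in a 2-dimensional latent space.
rng = np.random.default_rng(0)
mu = np.zeros((4, 2))
log_var = np.zeros((4, 2))
z = reparameterize(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
```

The sampled `z` vectors are the points that would be inspected for clustering in the latent space.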

Methods: Deep Learning (PyTorch), Computer Vision (OpenCV), Data Processing, Data Augmentation.

Article: MSc Thesis link

GitHub repo: https://github.com/yyhhoi/gait

DeepVOG

2018-19

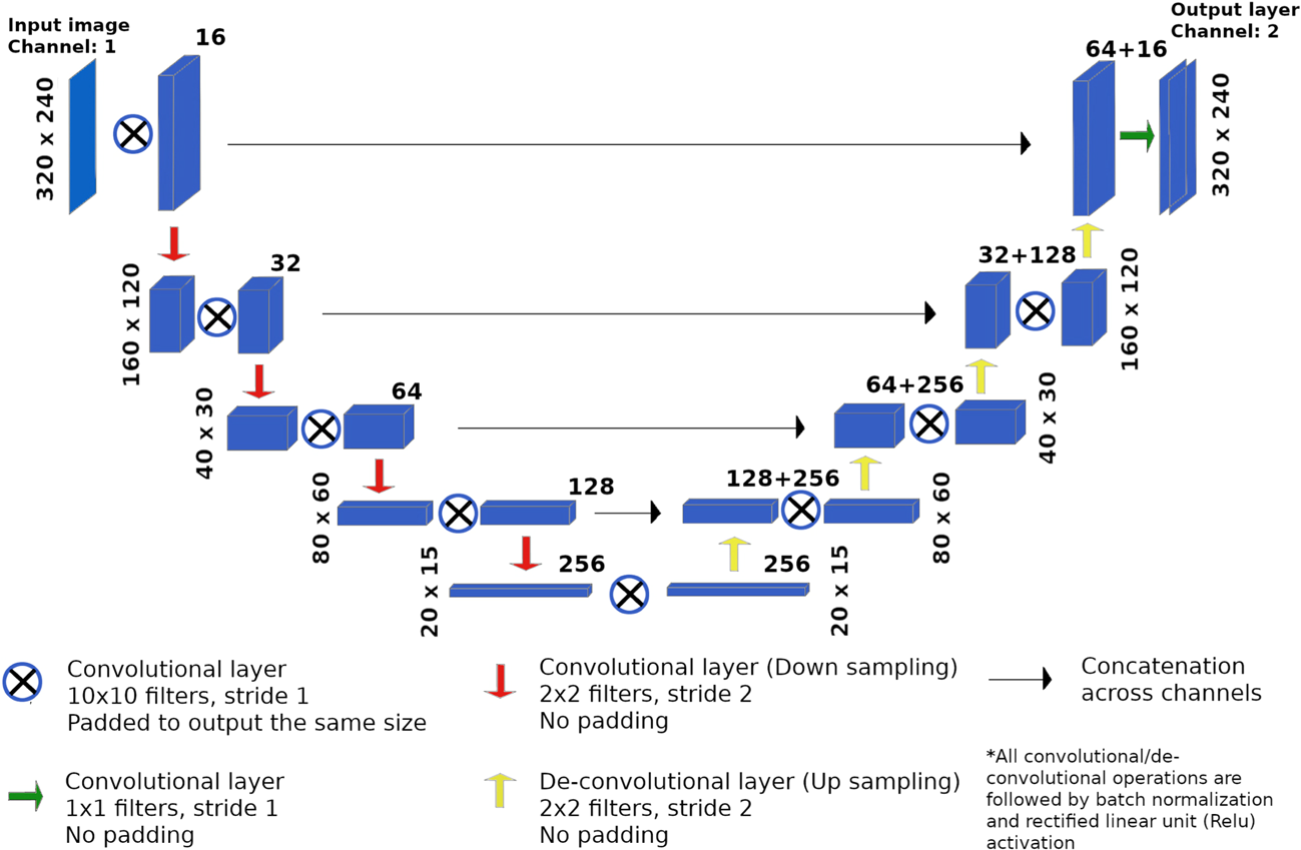

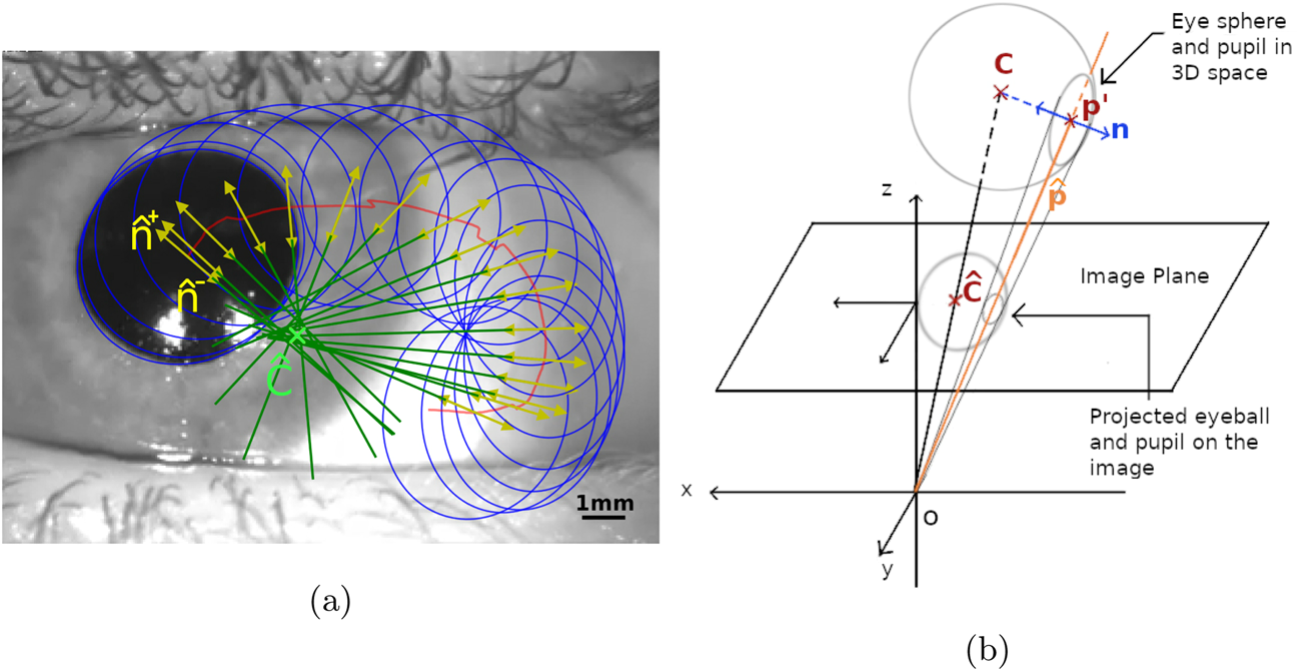

Summary: Conventional eye-tracking algorithms fail to detect eye movements in dark and noisy camera videos, such as those recorded inside an MRI machine. To solve this problem, we developed a deep-learning-based eye-tracking model for noisy camera feeds.

The network was trained on clinical eye-tracking images provided by LMU clinics. It performs semantic segmentation of the pupil area and fits a 3D eyeball model to estimate the gaze. The network generalizes well to other data sets. A Docker image is available for easy deployment of the model.
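To give a feel for the step between segmentation and gaze estimation, the pupil mask predicted by a network can be summarised as an ellipse (centre and semi-axes), which is the kind of 2D observation a 3D eyeball model can then be fitted to. The following is a minimal sketch that estimates the ellipse from image moments with NumPy. It is not the DeepVOG implementation, whose fitting routine is not described here, and the function name and synthetic mask are assumptions made for illustration.

```python
import numpy as np

def ellipse_from_mask(mask):
    """Estimate centre and semi-axes of a filled elliptical region.

    For a uniformly filled ellipse, the variance along each principal
    axis equals (semi-axis)^2 / 4, so semi-axis = 2 * sqrt(eigenvalue).
    """
    ys, xs = np.nonzero(mask)
    centre = (xs.mean(), ys.mean())
    cov = np.cov(np.stack([xs, ys]))
    eigvals = np.linalg.eigvalsh(cov)           # ascending order
    minor, major = 2.0 * np.sqrt(eigvals)
    return centre, (major, minor)

# Synthetic "pupil" mask: ellipse centred at (50, 40) with semi-axes 20 and 10.
yy, xx = np.mgrid[0:80, 0:100]
mask = ((xx - 50) / 20.0) ** 2 + ((yy - 40) / 10.0) ** 2 <= 1.0
centre, (major, minor) = ellipse_from_mask(mask)
```

On real segmentation output, the mask would first be thresholded and cleaned of small spurious regions before the ellipse is estimated.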

Methods: Deep Learning (TensorFlow, Keras), Computer Vision (OpenCV), Data Augmentation (Keras), Synthetic Data Generation (Blender), Data Processing.

Publication: Yiu, Y.-H., Aboulatta, M., Raiser, T., Ophey, L., Flanagin, V. L., zu Eulenburg, P., & Ahmadi, S.-A. (2019). DeepVOG: Open-source pupil segmentation and gaze estimation in neuroscience using deep learning. Journal of Neuroscience Methods, 324, 108307. https://doi.org/10.1016/j.jneumeth.2019.05.016

GitHub repo: https://github.com/pydsgz/DeepVOG